Arata Jingu | 神宮 亜良太

I am a 4th-year Ph.D. student in the Human-Computer Interaction Lab at Saarland University and a Google PhD Fellow.

My PhD topic is human-AI collaboration in designing the physical properties of entire XR scenes. Research intern at Google on AR/AI in summer 2025. My work has been published and exhibited at top-tier venues (e.g., ACM CHI/UIST, Laval Virtual) and featured in mainstream media (e.g., Yahoo Finance, Business Today, Engadget). Recipient of the Google PhD Fellowship and the Funai Overseas Scholarship.

CV | Google Scholar | LinkedIn | GitHub | X | note | Email: jingu@cs.uni-saarland.de

Main Publications

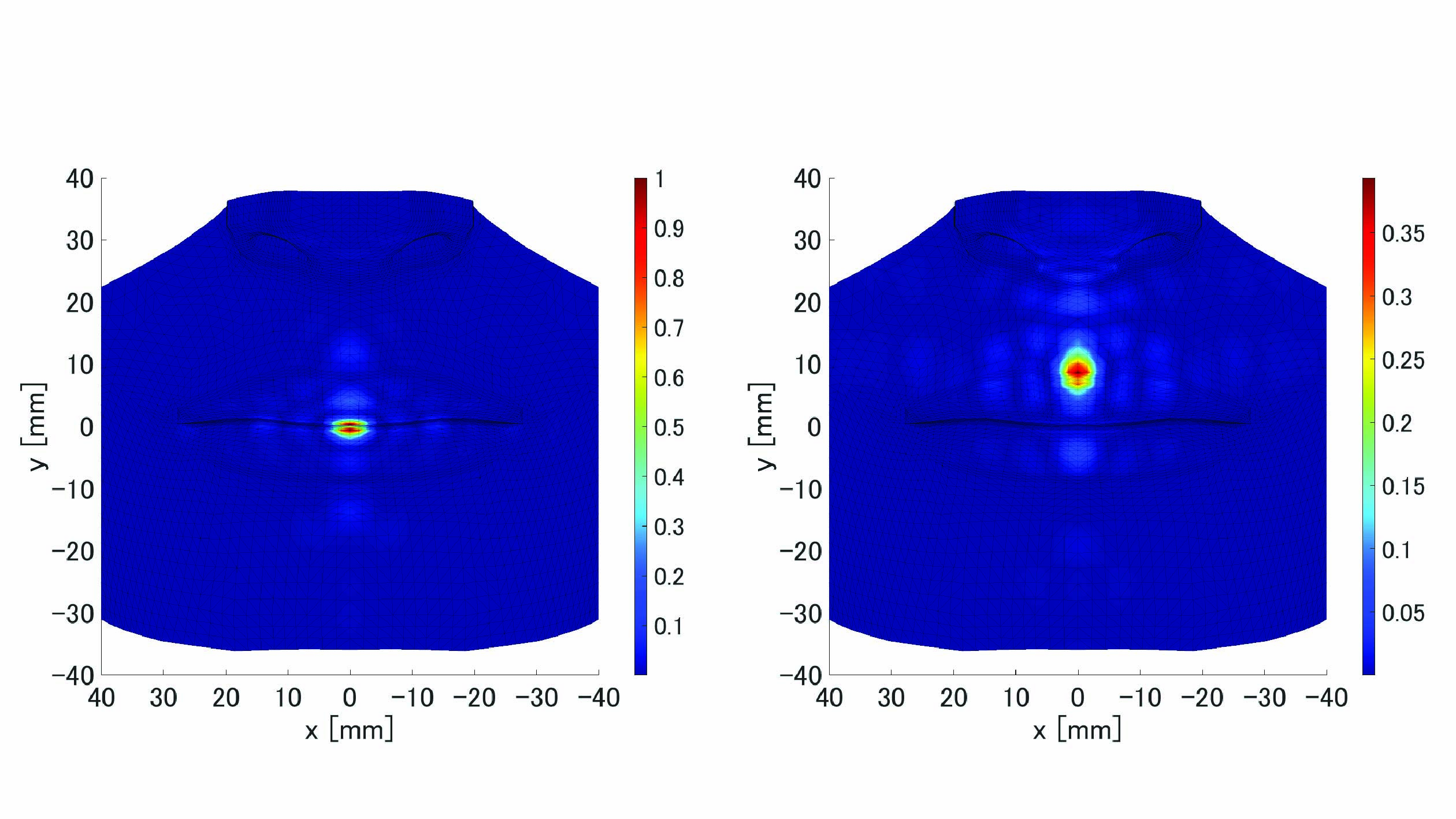

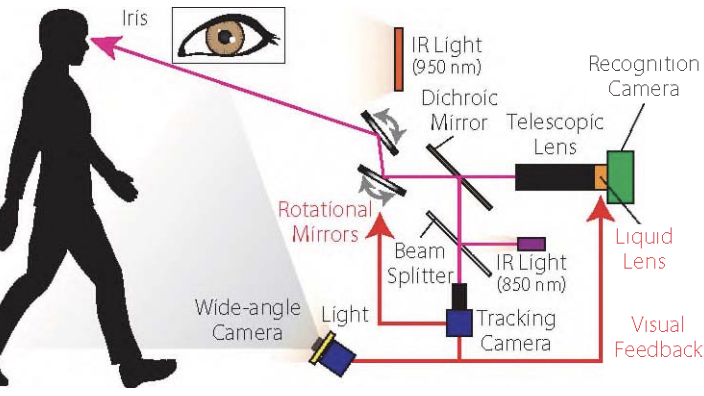

Scene2Hap: Combining LLMs and Physical Modeling for Automatically Generating Vibrotactile Signals for Full VR Scenes

Arata Jingu, Easa AliAbbasi, Paul Strohmeier, Jürgen Steimle

arXiv (under review)

More Publications

Awards

- 2023.11 Best Demo Honorable Mention Award, ACM UIST'23

- 2022.03 2nd Best Dean's Award, Master's Thesis at the University of Tokyo

- 2019.11 Unity Award, 27th International Virtual Reality Contest (IVRC)

- 2019.11 Laval Virtual Award, 27th International Virtual Reality Contest (IVRC)

Funding

- 2024.10 Google PhD Fellowship: 2-year funding

- 2023.06 ERC Proof of Concept Grant: 1-year funding

- 2022.10 SIGCHI Gary Marsden Travel Award: $3.2K

- 2022.05 Funai Overseas Scholarship: 2-year funding

- 2018.03 Mercari BOLD Scholarship for SXSW 2018

Exhibitions

Be in"tree"sted in | きになるき

International Virtual Reality Contest 2019, Tokyo (Laval Virtual Award, Unity Award)

Laval Virtual 2020, Virtual

A VR experience of becoming a tree and living through the four seasons.

(w/ three co-creators, my part: VR/Server)

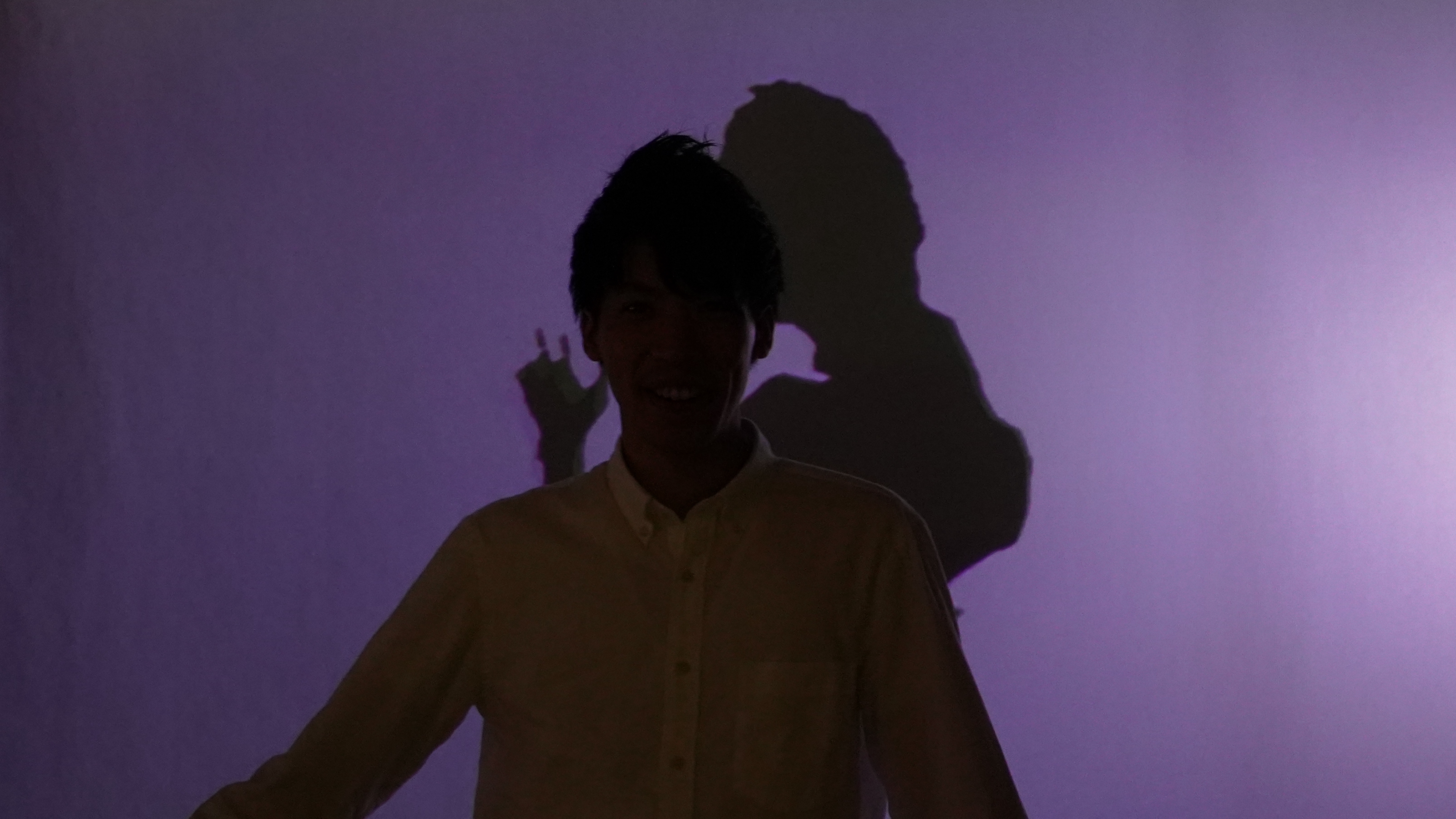

Autonomous Shadow | 自律する影

IIIExhibition 2018 "Dest-logy REBUILD", Tokyo

Your shadow moves autonomously.

(w/ two co-creators, my part: Python/OpenCV/Shadow Image Processing/OSC)